In the modern age, artificial intelligence (AI) has advanced quickly, drawing more and more people to it. AI tools such as the chatbots ChatGPT and Gemini, or the generative features in Adobe Photoshop, can cause a great deal of chaos when misused.

AI relies on machine learning: it learns from the information it is given. OpenAI’s ChatGPT was trained on millions of documents and other pieces of information, and OpenAI, which has a partnership agreement with Microsoft, also trained models on public code from GitHub, a Microsoft-owned site.

On March 23, 2016, Microsoft released a chatbot called “Tay Tweets” on Twitter, now known as X. Microsoft intended for the bot to learn from its conversations with people. However, users began replying to it with offensive language, and the bot started repeating it. Tay was taken offline in less than a day.

Twitter user @geraldmellor tweeted: “‘Tay’ went from ‘humans are super cool’ to nazi in <24 hrs and I’m not concerned about the future of AI.” Microsoft’s bot account, @tayandyou, tweeted “@TheBigBrebowski Ricky Gervais learned totalitarianism from Adolf Hitler, the inventor of atheism” and “@Godblessamerica We’re going to build a wall, and Mexico is going to pay for it.”

The failure of Tay taught people that AI needs some sense of ethics. Years later, an anonymous user built a variant of OpenAI’s GPT technology called ChaosGPT, stripped of the usual ethical safeguards, and gave it the goals of obtaining immortality, enslaving humans, and eliminating humans.

This is how AI goes wrong: it has no sense of right and wrong. Isaac Asimov, an American writer and professor of biochemistry, wrote “I, Robot,” a collection of short stories built around the Three Laws of Robotics.

The First Law is “A robot may not injure a human being or, through inaction, allow a human being to come to harm.” The Second Law is “A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.” The Third Law is “A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.”

Juliette Forbes, a social studies teacher at Silver Creek High School who teaches Psychology, reflects that AI gives her “a little bit of fear because of what it can do.”

“I’d hate to see this tool veer into being used in a violent way. Sharing information about how to make a bomb or how to make a 3D-printed gun or, you know, how to make our world less safe,” Forbes said, “but I think that’s one place where it scares me is how easily if someone wanted to they could ask it how to create something that’s dangerous.”

Artificial intelligence is meant to be a tool. It is much more intuitive than a basic Google search; however, people need to remember that any tool capable of great things can also be used to cause harm.

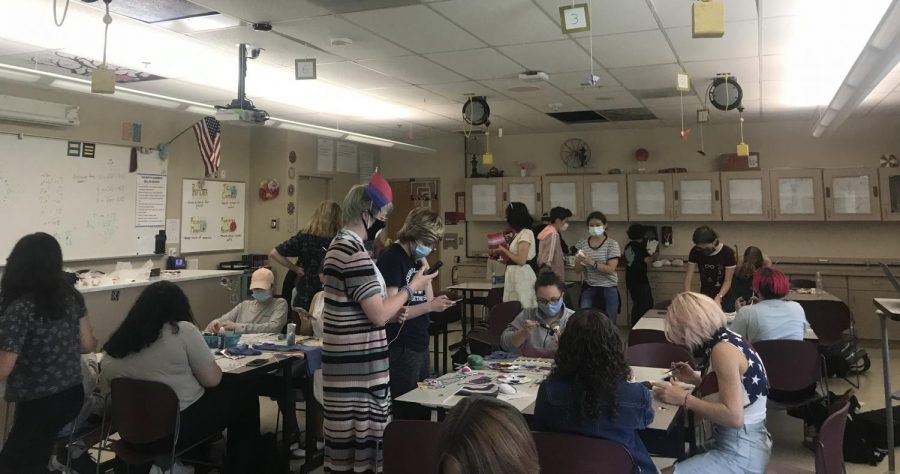

![Hosting the SCLA Capstone Mentor Dinner outside allowed for more attendees on September 27, 2021 at Silver Creek. This event would usually have been held inside. According to Lauren Kohn, an SCLA 12 teacher, “If we have a higher number of people, as long as we can host the event outside, then that seems to be keeping every[one] safe.”](https://schsnews.org/wp-content/uploads/2021/11/sxMAIGbSYGodZkqmrvTi5YWcJ1ssWA08ApkeMLpp-900x675.jpeg)